M. Fouesneau¶

This Notebook shows how to implement a straight line fitting using MCMC and an outlier mixture model.

%%file requirements.txt

numpy

scipy

matplotlib

corner

daft

pystanOverwriting requirements.txt

#@title Install packages { display-mode: "form" }

# !pip install -r requirements.txt --quiet#@title setup notebook { display-mode: "form" }

# Loading configuration

# Don't forget that mac has this annoying configuration that leads

# to limited number of figures/files

# ulimit -n 4096 <---- osx limits to 256

# Notebook matplotlib mode

%pylab inline

# set for retina or hi-resolution displays

%config InlineBackend.figure_format='retina'

light_minimal = {

'font.family': 'serif',

'font.size': 14,

"axes.titlesize": "x-large",

"axes.labelsize": "large",

'axes.edgecolor': '#666666',

"xtick.direction": "out",

"ytick.direction": "out",

"xtick.major.size": "8",

"xtick.minor.size": "4",

"ytick.major.size": "8",

"ytick.minor.size": "4",

'xtick.labelsize': 'small',

'ytick.labelsize': 'small',

'ytick.color': '#666666',

'xtick.color': '#666666',

'xtick.top': False,

'ytick.right': False,

'axes.spines.top': False,

'axes.spines.right': False,

'image.aspect': 'auto'

}

import pylab as plt

import numpy as np

plt.style.use(light_minimal)

from corner import corner

from IPython.display import display, Markdown

from itertools import cycle

from matplotlib.colors import is_color_like

def steppify(x, y):

""" Steppify a curve (x,y). Useful for manually filling histograms """

dx = 0.5 * (x[1:] + x[:-1])

xx = np.zeros( 2 * len(dx), dtype=float)

yy = np.zeros( 2 * len(y), dtype=float)

xx[0::2], xx[1::2] = dx, dx

yy[0::2], yy[1::2] = y, y

xx = np.concatenate(([x[0] - (dx[0] - x[0])], xx, [x[-1] + (x[-1] - dx[-1])]))

return xx, yy

def fast_violin(dataset, width=0.8, bins=None, color=None, which='both',

**kwargs):

""" Make a violin plot with histograms instead of kde """

if bins is None:

a, b = np.nanmin(dataset), np.nanmax(dataset)

d = np.nanstd(dataset, axis=0)

d = np.nanmin(d[d>0])

d /= np.sqrt(dataset.shape[1])

bins = np.arange(a - 1.5 * d, b + 1.5 * d, d)

default_colors = 'C0', 'C1', 'C2', 'C3', 'C4', 'C5', 'C6', 'C7', 'C8', 'C9'

if is_color_like(color):

colors = cycle([color])

elif (color is None):

colors = cycle(default_colors)

elif len(color) != dataset.shape[1]:

colors = cycle(default_colors)

else:

colors = color

for k, color_ in zip(range(dataset.shape[1]), colors):

n, bx = np.histogram(dataset[:, k], bins=bins, **kwargs)

x_ = 0.5 * (bx[1:] + bx[:-1])

y_ = n.astype(float) / n.max()

y0_ = (k + 1) + np.zeros_like(x_)

y1_ = (k + 1) + np.zeros_like(x_)

if which in ('left', 'both'):

y0_ += - 0.5 * width * y_

if which in ('right', 'both'):

y1_ += + 0.5 * width * y_

r = plt.plot(y0_, x_, color=color_)

plt.plot(y1_, x_, color=r[0].get_color())

plt.fill_betweenx(x_, y0_, y1_,

color=r[0].get_color(),

alpha=0.5)

percs_where = np.array([0.16, 0.50, 0.84])

percs_val = np.interp(percs_where, np.cumsum(y_) / np.sum(y_), x_)

plt.plot([k + 1, k + 1],

[percs_val[0], percs_val[2]], '-', color='k')

plt.plot([k + 1],

[percs_val[1]], 'o', color='k')Populating the interactive namespace from numpy and matplotlib

Preliminary discussion around Bayesian statistics¶

As astronomers, we are interested in characterizing uncertainties as much as the point-estimate in our analyses. This means we want to infer the distribution of possible/plausible parameter values.

In the Bayesian context, the Bayes’ theorem, also called chain rule of conditional probabilities, expresses how a degree of belief (prior), expressed as a probability, should rationally change to account for the availability of related evidence.

where and are ensembles of events. ().

- the probability of event occurring given that an event already occured. It is also called the posterior probability of given .

- is the probability of event occurring given that already occured. It is also called the likelihood of A given a fixed B.

- and are the probabilities of observing and independently of the other one. They are known as the marginal probabilities or prior probabilities.

We note that in this equation we can swap and . The Bayesian framework only brings interpretations. One can see it as a knowledge refinement: given what I know about (e.g, gravity, stellar evolution, chemistry) what can we learn with new events .

A note about the proof: The equation derives from a simple observation (geometry/ensemble theory).

The figure below gives the visual support

#@title Baye's theorem figure { display-mode: "form" }

from matplotlib.patches import Ellipse, Rectangle

ax = plt.subplot(111)

patches = [

Ellipse((-1, 0), 2, 3, angle=30, facecolor='0.5', edgecolor='k', alpha=0.1),

Ellipse((-1, 0), 2, 3, angle=30, facecolor='None', edgecolor='k'),

Ellipse((1, 0), 3, 2, angle=15, facecolor='0.5', edgecolor='k', alpha=0.1),

Ellipse((1, 0), 3, 2, angle=15, facecolor='None', edgecolor='k'),

Rectangle((-3, -3), 6, 6, facecolor='None', edgecolor='k')

]

for patch in patches:

ax.add_patch(patch)

plt.text(-2.8, 2.8, r'$\Omega$', va='top', ha='left', color='k')

plt.text(-1, 0, 'A', va='top', ha='left', color='k')

plt.text(1, 0, 'B', va='top', ha='left', color='k')

plt.annotate(r'A$\cap$B', (-0.2, 0.),

xycoords='data',

arrowprops=dict(facecolor='black', arrowstyle="->"),

horizontalalignment='center', verticalalignment='bottom',

xytext=(0.5, 1.5))

plt.xlim(-3, 3)

plt.ylim(-3, 3)

ax.get_xaxis().set_visible(False)

ax.get_yaxis().set_visible(False)

For any event from $$

$\Omega$ but it is commonly omitted. The final equation of the Bayes’ theorem derives from the rearanging the terms of the right hand-side equalities.

The equation itself is most commonly attributed to reverand Thomas Bayes in 1763, but also to Pierre-Simon de Laplace. However, the current interpretation and usage significantly expanded from its initial formulation.

Straight line problem¶

In astronomy, we commonly have to fit a model to data. A very typical example is the fit of a straight line to a set of points in a two-dimensional plane. Astronomers are very good at finding a representation or a transformation of their data in which they can identify linear correlations (e.g., plotting in log-log space, action-angles for orbits, etc). Consequently, a polynomial remains a “line” in a more complicated representation space.

In this exercise, we consider a dataset with uncertainties along one axis only for simplicity, i.e. uncertainties along x negligible. These conditions are rarely met in practice but the principles remain similar. However, we also explore the outlier problem: bad data are very common in astronomy.

We emphasize the importance of having a “generative model” for the data, even an approximate one. Once there is a generative model, we have a direct computation of the likelihood of the parameters or the posterior probability distribution.

Problem definition¶

We will fit a linear model to a dataset

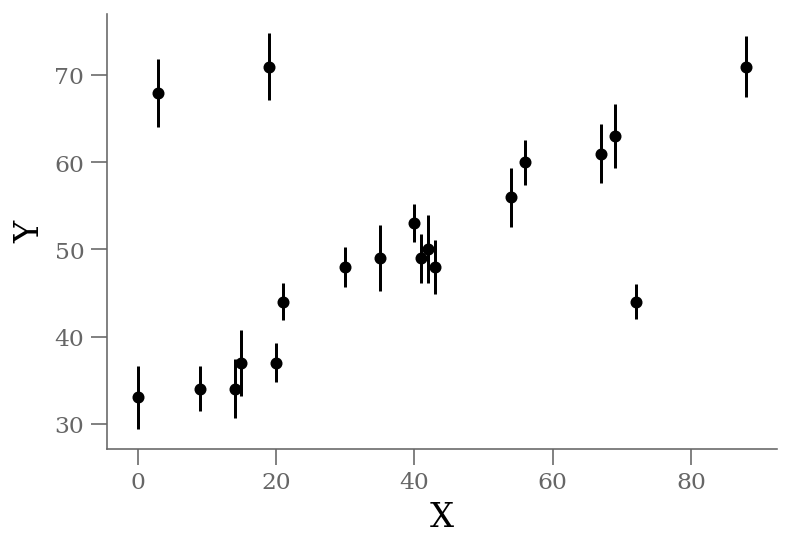

Let’s start by looking at the data.

Generate dataset: Straight line with outliers¶

#@title Generate data { display-mode: "form" }

x = np.array([ 0, 3, 9, 14, 15, 19, 20, 21, 30, 35,

40, 41, 42, 43, 54, 56, 67, 69, 72, 88])

y = np.array([33, 68, 34, 34, 37, 71, 37, 44, 48, 49,

53, 49, 50, 48, 56, 60, 61, 63, 44, 71])

sy = np.array([ 3.6, 3.9, 2.6, 3.4, 3.8, 3.8, 2.2, 2.1, 2.3, 3.8,

2.2, 2.8, 3.9, 3.1, 3.4, 2.6, 3.4, 3.7, 2.0, 3.5])

np.savetxt('line_outlier.dat', np.array([x, y, sy]).T)x, y, sy = np.loadtxt('line_outlier.dat', unpack=True)

plt.errorbar(x, y, yerr=sy, marker='o', ls='None', ms=5, color='k');

plt.xlabel('X');

plt.ylabel('Y');

We see three outliers, but there is an obvious linear relation between the points overall.

Blind fit: no outlier model¶

Let’s see what happens if we fit the data without any outlier consideration.

Equations¶

We would like to fit a linear model to this above dataset:

We commonly start with the Bayes’s rule:

Hence we need to define our prior and likelihood

Given this model and the gaussian uncertainties on our data, we can compute a Gaussian likelihood for each point:

Note that is on the right hand side of the . It is because we assume given the Gaussian uncertainties when we write the likelihood that way.

The total likelihood is the product of all the individual likelihoods (as we assume independent measurements).

For numerical stability reasons, it is preferable to take the log-likelihood of the data. We have:

The posterior is the likelihood times the prior distributions. Let’s assume from the inspection of the data that , we can write

and

(The upper value is abitrary.)

Coding¶

Here we use PyStan.

The PyStan project is the official Python wrapper of the Stan Probabilistic programming language, which is implemented in C++. It uses a No U-Turn Sampler (which is also in PyMC). As far as API goes, the important difference between PyStan as compared to emcee and PyMC is that it requires you to write and compile non-Python code within your Python script when defining your model.

Because PyStan depends on the Stan package, installation can be difficult. Although I only did pip install pystan and it seemed to work just fine on my system.

import numpy as np

# pystan 3 bug fixing

# RuntimeError: asyncio.run() cannot be called from a running event loop

import nest_asyncio

nest_asyncio.apply()

import stan

#----------------------------------------------

# Create the Stan model

# this is done by defining a string of Stan code.

fit_code = """

data {

int<lower=0> N; // number of points

real x[N]; // x values

real y[N]; // y values

real sy[N]; // y uncertainties

}

parameters {

real alpha;

real beta;

}

transformed parameters {

real ymodel[N];

for (j in 1:N)

ymodel[j] = beta + alpha * x[j];

}

model {

alpha ~ uniform(0, 100);

beta ~ uniform(0, 100);

y ~ normal(ymodel, sy);

}

"""

# perform the fit

guess = {'alpha': 0.45, 'beta': 30}

fit_data = {'N': len(x), 'x': x, 'y': y, 'sy': sy}

posterior = stan.build(fit_code, data=fit_data, random_seed=1)

traces_ols = posterior.sample(num_chains=4, num_samples=25000, init=[guess] * 4)

# extract the traces

traces = [traces_ols['alpha'], traces_ols['beta']]Building...

Building: 36.0s, done.Messages from stanc:

Warning in '/tmp/httpstan_78geviow/model_4me2ds6b.stan', line 22, column 22: Argument 100 suggests there may be parameters that are not unit scale; consider rescaling with a multiplier (see manual section 22.12).

Warning in '/tmp/httpstan_78geviow/model_4me2ds6b.stan', line 22, column 4: Parameter beta is given a uniform distribution. The uniform distribution is not recommended, for two reasons: (a) Except when there are logical or physical constraints, it is very unusual for you to be sure that a parameter will fall inside a specified range, and (b) The infinite gradient induced by a uniform density can cause difficulties for Stan's sampling algorithm. As a consequence, we recommend soft constraints rather than hard constraints; for example, instead of giving an elasticity parameter a uniform(0,1) distribution, try normal(0.5,0.5).

Warning in '/tmp/httpstan_78geviow/model_4me2ds6b.stan', line 21, column 23: Argument 100 suggests there may be parameters that are not unit scale; consider rescaling with a multiplier (see manual section 22.12).

Warning in '/tmp/httpstan_78geviow/model_4me2ds6b.stan', line 21, column 4: Parameter alpha is given a uniform distribution. The uniform distribution is not recommended, for two reasons: (a) Except when there are logical or physical constraints, it is very unusual for you to be sure that a parameter will fall inside a specified range, and (b) The infinite gradient induced by a uniform density can cause difficulties for Stan's sampling algorithm. As a consequence, we recommend soft constraints rather than hard constraints; for example, instead of giving an elasticity parameter a uniform(0,1) distribution, try normal(0.5,0.5).

Sampling: 0%

Sampling: 25% (26000/104000)

Sampling: 50% (52000/104000)

Sampling: 75% (78000/104000)

Sampling: 100% (104000/104000)

Sampling: 100% (104000/104000), done.

Messages received during sampling:

Gradient evaluation took 1.6e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.16 seconds.

Adjust your expectations accordingly!

Gradient evaluation took 1.1e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.11 seconds.

Adjust your expectations accordingly!

Gradient evaluation took 1e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.1 seconds.

Adjust your expectations accordingly!

Gradient evaluation took 6e-06 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.06 seconds.

Adjust your expectations accordingly!

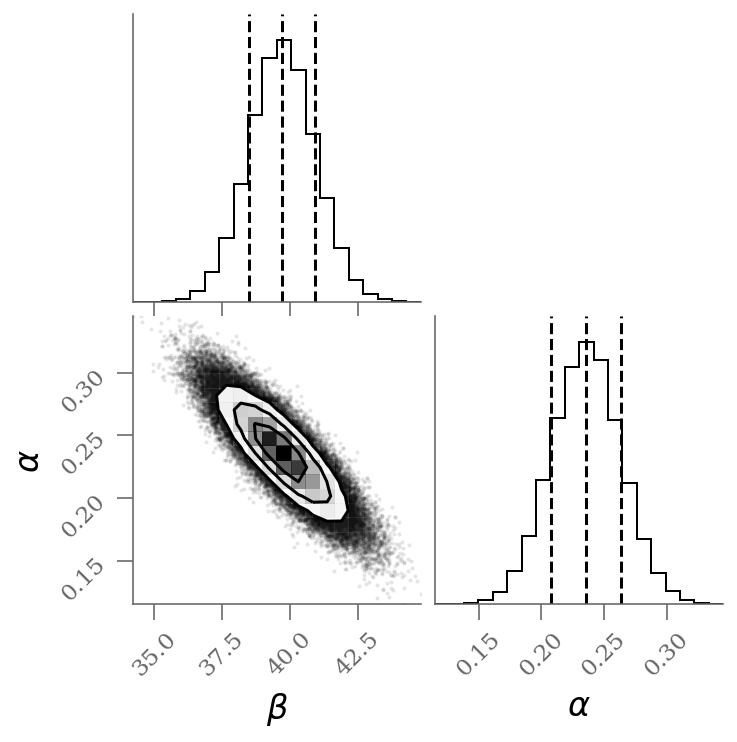

varnames = 'beta', 'alpha'

sample = np.array([traces_ols[k].ravel() for k in varnames]).T

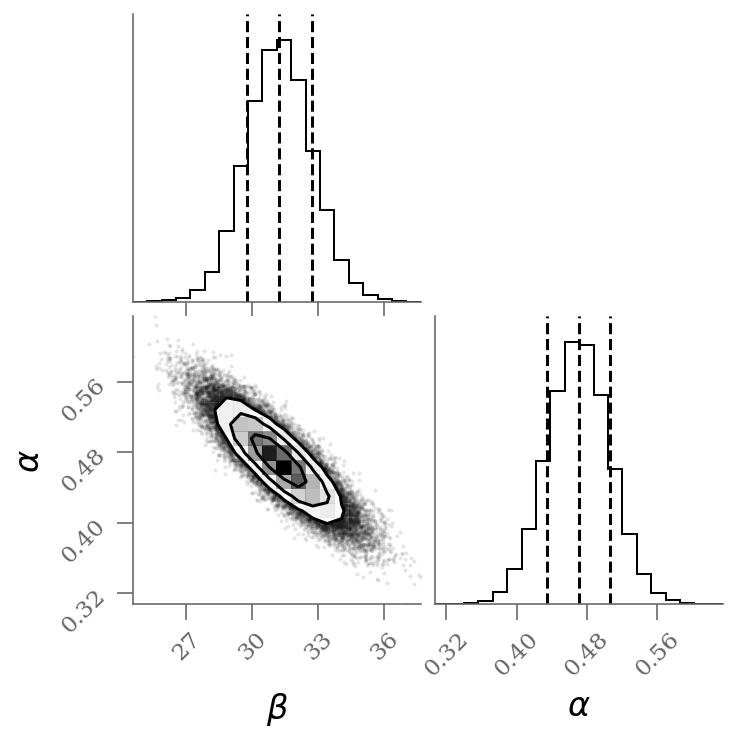

corner(sample, labels=(r'$\beta$', r'$\alpha$'), quantiles=(0.16, 0.50, 0.84));

x, y, sy = np.loadtxt('line_outlier.dat', unpack=True)

plt.errorbar(x, y, yerr=sy, marker='o', ls='None', ms=5, color='k');

plt.xlabel('X');

plt.ylabel('Y');

ypred_blind = sample[:, 0] + sample[:, 1] * x[:, None]

plt.plot(x, ypred_blind[:, :100], color='C0', rasterized=True, alpha=0.3, lw=0.3)

percs_blind = np.percentile(sample, [16, 50, 84], axis=0)

display(Markdown(r"""Without outlier modeling:

* $\alpha$ = {1:0.3g} [{0:0.3g}, {2:0.3g}]

* $\beta$ = {4:0.3g} [{3:0.3g}, {5:0.3g}]

""".format(*percs_blind.T.ravel())

))It’s clear from this plot that the outliers exerts a disproportionate influence on the fit.

This is due to the nature of our likelihood function. One outlier that is, say (standard deviations) away from the fit will out-weight the contribution of points which are only away.

In conclusion, least-square likelihoods are overly sensitive to outliers, and this is causing issues with our fit.

One way to address this is to simply model the outliers.

Mixture Model¶

The Bayesian approach to accounting for outliers generally involves mixture models so that the initial model is combined with a complement model accounting for the outliers:

where is the mixing coefficient (between 0 and 1), and the probabilities of being an inlier and outlier, respectively.

So let’s propose a more complicated model that is a mixture between a signal and a background

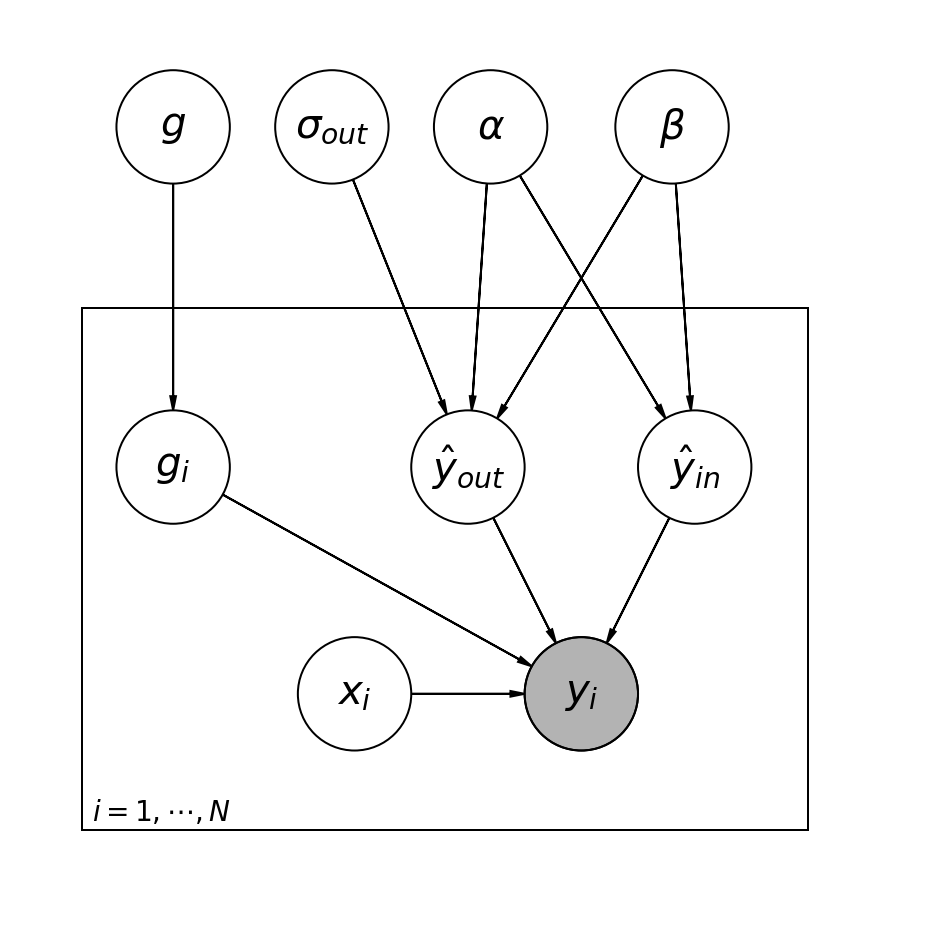

Graphical model¶

A short parenthesis on probabilistic programming. One way to represent the above model is through probabilistic graphical models (pgm).

Using daft a python package, I draw a (inaccurate) pgm of the straight line model with outliers.

import daft

# Instantiate the PGM.

pgm = daft.PGM([4, 4], origin=[-0.5, -0.0], grid_unit=4,

node_unit=2, label_params=dict(fontsize='x-large'))

# Hierarchical parameters.

pgm.add_node(daft.Node("sout", r"$\sigma_{out}$", 0.9, 3.5, fixed=False))

pgm.add_node(daft.Node("g", r"$g$", 0.2, 3.5, fixed=False))

pgm.add_node(daft.Node("beta", r"$\beta$", 2.4, 3.5))

pgm.add_node(daft.Node("alpha", r"$\alpha$", 1.6, 3.5))

# Latent variable.

pgm.add_node(daft.Node("x", r"$x_i$", 1, 1))

pgm.add_node(daft.Node("gn", r"$g_i$", 0.2, 2, observed=False))

pgm.add_node(daft.Node("yin", r"$\hat{y}_{in}$", 2.5, 2, observed=False))

pgm.add_node(daft.Node("yout", r"$\hat{y}_{out}$", 1.5, 2, observed=False))

# Data.

pgm.add_node(daft.Node("y", r"$y_i$", 2, 1, observed=True))

# And a plate.

pgm.add_plate(daft.Plate([-0.2, 0.5, 3.2, 2.2],

label=r"$i = 1, \cdots, N$", shift=-0.1,

bbox={"color": "none"}))

# add relations

pgm.add_edge("x", "y")

pgm.add_edge("alpha", "yin")

pgm.add_edge("beta", "yin")

pgm.add_edge("alpha", "yout")

pgm.add_edge("beta", "yout")

pgm.add_edge("sout", "yout")

pgm.add_edge("g", "gn")

pgm.add_edge("gn", "y")

pgm.add_edge("yin", "y")

pgm.add_edge("yout", "y")

pgm.render();

There are multiple ways to define this mixture modeling.

Brutal version: 1 parameter per datapoint¶

corresponds to the previous likelihood.

is arbitrary as we do not really have information about the causes for the outliers. We assume a similar Gaussian form centered on the affine relation but with a significantly larger dispersion. Doing so is intuitively considering the distance to the line to decide whether we have an outlier or not (this is intuitively a Bayesian version of sigma-clipping)

It is important to note that the total likelihood is a product of sums:

Hence, we need to be careful to properly transform the into during the calculations (see: np.logsumexp)

Hence the likelihood becomes explicitly

We “simply” expanded our model with 20 nuisance parameters: is a series of weights, which range from 0 to 1 and encode for each point the degree to which it fits the model.

indicates an outlier, in which case a Gaussian of width is used in the computation of the likelihood. This can also be a nuisance parameter. However, for this example, we fix to an arbitrary large value (large compared with the dispersion of the data)

Note that we have a prior that all must be strictly between 0 and 1.

We now make the probabilistic equations that link the various variables together:

We would like to fit a linear model to this above dataset:

One must provide some prior to properly implement the model. Let’s suppose weak informative normal priors (they correspond to a Ridge regression context)

We also have the outlier component

where is unknown but positively constrained (half-Normal prior)

And each data point has a probability (0 or 1) to be an outlier or not with a probability

(this is of course, sub-optimal)

Our final model is a mixture of the two components:

We code the model as:

fit_code = """

data {

int<lower=0> N; // number of points

real x[N]; // x values

real y[N]; // y values

real sy[N]; // y uncertainties

}

parameters {

real alpha;

real beta;

real<lower=0, upper=1> is_outlier[N];

}

transformed parameters {

real yin[N];

real sigma_y_out = 100.;

for (j in 1:N){

yin[j] = beta + alpha * x[j];

}

}

model {

alpha ~ uniform(0, 100);

beta ~ uniform(0, 100);

is_outlier ~ uniform(0, 1);

for (n in 1:N){

target += log_sum_exp(log(1 - is_outlier[n])

+ normal_lpdf(y[n] | yin[n], sy[n]),

log(is_outlier[n])

+ normal_lpdf(y[n] | yin[n], sigma_y_out));

}

}

"""

# perform the fit

guess = {'alpha': 0.45, 'beta': 30, 'frac_outliers': 0.3}

fit_data = {'N': len(x), 'x': x, 'y': y, 'sy': sy}

posterior = stan.build(fit_code, data=fit_data, random_seed=1)

traces_signoise = posterior.sample(num_chains=2, num_samples=25000, init=[guess] * 2)Building...

Building: 39.0s, done.Messages from stanc:

Warning in '/tmp/httpstan_r33qx58g/model_ah6ap5ar.stan', line 26, column 22: Argument 100 suggests there may be parameters that are not unit scale; consider rescaling with a multiplier (see manual section 22.12).

Warning in '/tmp/httpstan_r33qx58g/model_ah6ap5ar.stan', line 26, column 4: Parameter beta is given a uniform distribution. The uniform distribution is not recommended, for two reasons: (a) Except when there are logical or physical constraints, it is very unusual for you to be sure that a parameter will fall inside a specified range, and (b) The infinite gradient induced by a uniform density can cause difficulties for Stan's sampling algorithm. As a consequence, we recommend soft constraints rather than hard constraints; for example, instead of giving an elasticity parameter a uniform(0,1) distribution, try normal(0.5,0.5).

Warning in '/tmp/httpstan_r33qx58g/model_ah6ap5ar.stan', line 25, column 23: Argument 100 suggests there may be parameters that are not unit scale; consider rescaling with a multiplier (see manual section 22.12).

Warning in '/tmp/httpstan_r33qx58g/model_ah6ap5ar.stan', line 25, column 4: Parameter alpha is given a uniform distribution. The uniform distribution is not recommended, for two reasons: (a) Except when there are logical or physical constraints, it is very unusual for you to be sure that a parameter will fall inside a specified range, and (b) The infinite gradient induced by a uniform density can cause difficulties for Stan's sampling algorithm. As a consequence, we recommend soft constraints rather than hard constraints; for example, instead of giving an elasticity parameter a uniform(0,1) distribution, try normal(0.5,0.5).

Sampling: 0%

Sampling: 0% (1/52000)

Sampling: 0% (2/52000)

Sampling: 0% (101/52000)

Sampling: 1% (401/52000)

Sampling: 1% (600/52000)

Sampling: 2% (1100/52000)

Sampling: 52% (26900/52000)

Sampling: 100% (52000/52000)

Sampling: 100% (52000/52000), done.

Messages received during sampling:

Gradient evaluation took 2.8e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.28 seconds.

Adjust your expectations accordingly!

Gradient evaluation took 2.7e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.27 seconds.

Adjust your expectations accordingly!

varnames = 'beta', 'alpha'

sample = np.array([traces_signoise[k].ravel() for k in varnames]).T

corner(sample[:, :], labels=(r'$\beta$', r'$\alpha$', r'1-q'), quantiles=(0.16, 0.50, 0.84));

We see a distribution of points near a slope of , and an intercept of . We’ll plot this model over the data below, but first let’s see what other information we can extract from this trace.

One nice feature of analyzing MCMC samples is that the choice of nuisance parameters is completely irrelevant during the sampling.

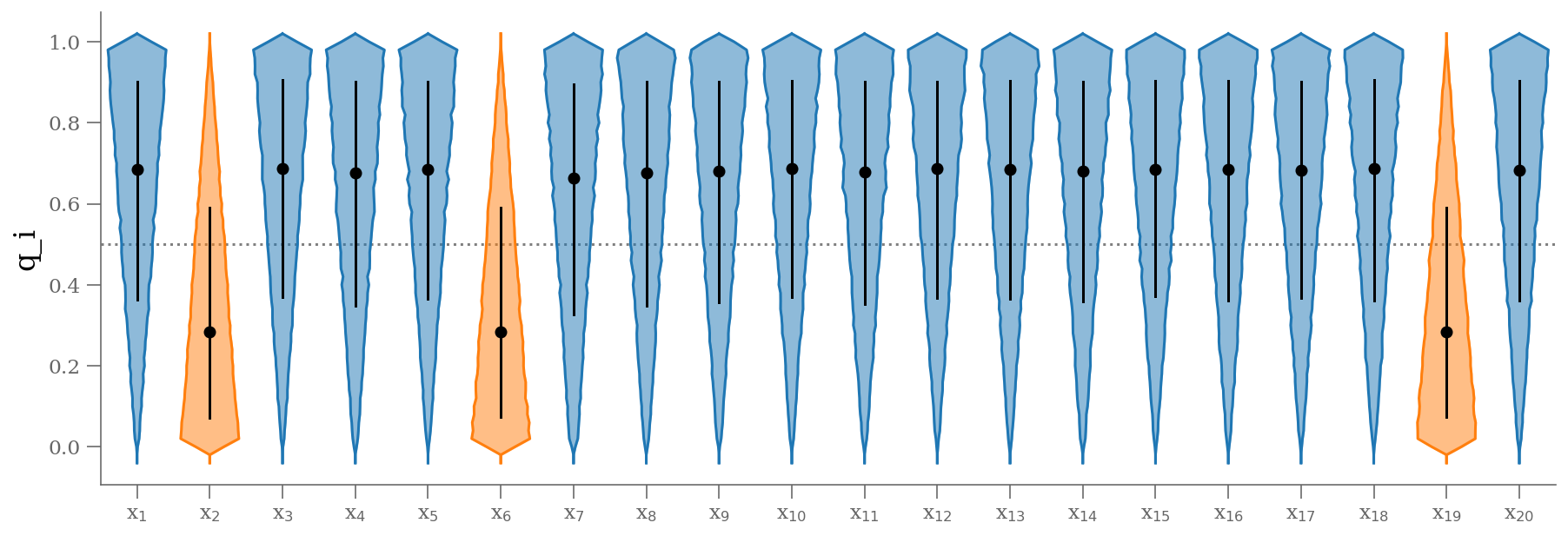

samples_q = 1 - traces_signoise['is_outlier'].T

plt.figure(figsize=(15, 5))

colors = np.where(np.median(samples_q, axis=0) < 0.5, 'C1', 'C0')

fast_violin(samples_q, color=colors, bins=np.arange(-0.05, 1.05, 0.02))

ticks = (np.arange(samples_q.shape[1]) + 1)

lbls = [r'x$_{{{0:d}}}$'.format(k) for k in (ticks).astype(int)]

plt.xticks(ticks, lbls)

plt.hlines([0.5], 0.5, len(ticks) + 0.5, color='0.5', zorder=-100, ls=':')

plt.xlim(0.5, len(ticks) + 0.5)

plt.ylabel('q_i');

q = np.median(samples_q, 0)

outliers = (q <= 0.5) # arbitrary choice

n_outliers = sum(outliers)

x, y, sy = np.loadtxt('line_outlier.dat', unpack=True)

plt.errorbar(x, y, yerr=sy, marker='o', ls='None', ms=5, color='k');

ypred_brute = sample[:, 0] + sample[:, 1] * x[:, None]

plt.plot(x, ypred_blind[:, :100], color='C0', rasterized=True, alpha=0.3, lw=0.3)

plt.plot(x, ypred_brute[:, :100], color='C1', rasterized=True, alpha=0.3, lw=0.3)

plt.plot(x[outliers], y[outliers], 'ro',

ms=20, mec='red', mfc='red', zorder=1, alpha=0.4)

plt.xlabel('X');

plt.ylabel('Y');

display(Markdown(r"""Without outlier modeling:

* $\alpha$ = {1:0.3g} [{0:0.3g}, {2:0.3g}]

* $\beta$ = {4:0.3g} [{3:0.3g}, {5:0.3g}]

""".format(*percs_blind.T.ravel())

))

percs_brute = np.percentile(sample[:, :2], [16, 50, 84], axis=0)

display(Markdown(r"""With outlier modeling: 22 parameters

* $\alpha$ = {1:0.3g} [{0:0.3g}, {2:0.3g}]

* $\beta$ = {4:0.3g} [{3:0.3g}, {5:0.3g}]

* number of outliers: ${6:d}$

""".format(*percs_brute.T.ravel(), n_outliers)

))The result shown in orange matches our intuition. In addition, the points automatically identified as outliers are the ones we would identify by eye to be suspicious. The blue shaded region indicates the previous “blind” result.

The smarter version¶

This previous model of outliers takes a simple linear model of 2 parameters and transforms it into a parameters, being the number of datapoints. This leads to 22 parameters in our case. What happens if you have 200 data points?

The problem with the previous model was that it adds one parameter for each data point, which not only makes the fitting expensive, but also makes the problem untracktable very quickly.

Based on the same formulation, we can consider one global instead individuals, which will characterize on the ensemble the probability of having an outlier. In other words, the fraction of outliers relative to the dataset.

with

We simply have 1 nuisance parameters: which ranges from 0 to 1.

Similarly to the previous model, indicates an outlier, in which case a Gaussian of width is used in the computation of the likelihood. This can also be a nuisance parameter.

From this model, we can estimate the odds of being an outlier with

Our likelihood can be expressed directly with tensor operations (otherwise we’d need to use theano’s @as_op

decorator). This means we get the likelihood gradient for free thanks to the Theano backend, which makes the MCMC sampler more efficient (HMC methods)

We now make the probabilistic equations that link the various variables together:

We would like to fit a linear model to this above dataset:

One must provide some prior to properly implement the model. Let’s suppose weak informative normal priors (they correspond to a Ridge regression context)

We also have the outlier component

where is unknown but positively constrained (half-Normal prior)

sets the ratio of inliers to outliers. Given our setup, it corresponds to the fraction of outliers in our model. One can set a wealky informative prior on as

(hopefully we do not have more than half of the data being made of outliers)

Our final model is a mixture of the two components:

we code the probabilistic model as follow (using DensityDist to set the final mixture likelihood)

fit_code = """

data {

int<lower=0> N; // number of points

real x[N]; // x values

real y[N]; // y values

real sy[N]; // y uncertainties

}

parameters {

real alpha;

real beta;

real frac_outliers;

}

transformed parameters {

real yin[N];

real log_frac_out;

real log_frac_in;

real is_outlier[N];

real lnp_in[N];

real lnp_out[N];

real lnp_tot[N];

real sigma_y_out = 100;

log_frac_in = log(1 - frac_outliers);

log_frac_out = log(frac_outliers);

for (j in 1:N){

yin[j] = beta + alpha * x[j];

lnp_in[j] = normal_lpdf(y[j] | yin[j], sy[j]);

lnp_out[j] = normal_lpdf(y[j] | yin[j], sigma_y_out);

lnp_tot[j] = log_sum_exp(log_frac_in + lnp_in[j], log_frac_out + lnp_out[j]);

is_outlier[j] = exp(log_frac_out + lnp_out[j]) / exp(lnp_tot[j]);

}

}

model {

// real val_n;

alpha ~ uniform(0, 100);

beta ~ uniform(0, 100);

frac_outliers ~ uniform(1e-4, 0.5);

for (n in 1:N){

target += lnp_tot[n];

}

}

"""

# perform the fit

guess = {'alpha': 0.45, 'beta': 30, 'frac_outliers': 0.3}

fit_data = {'N': len(x), 'x': x, 'y': y, 'sy': sy}

posterior = stan.build(fit_code, data=fit_data, random_seed=1)

traces_smart = posterior.sample(num_chains=4, num_samples=25000, init=[guess] * 4)Building...

Building: 36.2s, done.Messages from stanc:

Warning in '/tmp/httpstan_2fcgtd2c/model_5sfiv625.stan', line 42, column 28: Argument 1e-4 suggests there may be parameters that are not unit scale; consider rescaling with a multiplier (see manual section 22.12).

Warning in '/tmp/httpstan_2fcgtd2c/model_5sfiv625.stan', line 42, column 4: Parameter frac_outliers is given a uniform distribution. The uniform distribution is not recommended, for two reasons: (a) Except when there are logical or physical constraints, it is very unusual for you to be sure that a parameter will fall inside a specified range, and (b) The infinite gradient induced by a uniform density can cause difficulties for Stan's sampling algorithm. As a consequence, we recommend soft constraints rather than hard constraints; for example, instead of giving an elasticity parameter a uniform(0,1) distribution, try normal(0.5,0.5).

Warning in '/tmp/httpstan_2fcgtd2c/model_5sfiv625.stan', line 41, column 22: Argument 100 suggests there may be parameters that are not unit scale; consider rescaling with a multiplier (see manual section 22.12).

Warning in '/tmp/httpstan_2fcgtd2c/model_5sfiv625.stan', line 41, column 4: Parameter beta is given a uniform distribution. The uniform distribution is not recommended, for two reasons: (a) Except when there are logical or physical constraints, it is very unusual for you to be sure that a parameter will fall inside a specified range, and (b) The infinite gradient induced by a uniform density can cause difficulties for Stan's sampling algorithm. As a consequence, we recommend soft constraints rather than hard constraints; for example, instead of giving an elasticity parameter a uniform(0,1) distribution, try normal(0.5,0.5).

Warning in '/tmp/httpstan_2fcgtd2c/model_5sfiv625.stan', line 40, column 23: Argument 100 suggests there may be parameters that are not unit scale; consider rescaling with a multiplier (see manual section 22.12).

Warning in '/tmp/httpstan_2fcgtd2c/model_5sfiv625.stan', line 40, column 4: Parameter alpha is given a uniform distribution. The uniform distribution is not recommended, for two reasons: (a) Except when there are logical or physical constraints, it is very unusual for you to be sure that a parameter will fall inside a specified range, and (b) The infinite gradient induced by a uniform density can cause difficulties for Stan's sampling algorithm. As a consequence, we recommend soft constraints rather than hard constraints; for example, instead of giving an elasticity parameter a uniform(0,1) distribution, try normal(0.5,0.5).

Sampling: 0%

Sampling: 0% (100/104000)

Sampling: 0% (400/104000)

Sampling: 25% (26400/104000)

Sampling: 50% (52300/104000)

Sampling: 75% (78000/104000)

Sampling: 100% (104000/104000)

Sampling: 100% (104000/104000), done.

Messages received during sampling:

Gradient evaluation took 4.9e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.49 seconds.

Adjust your expectations accordingly!

Gradient evaluation took 4.5e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.45 seconds.

Adjust your expectations accordingly!

Gradient evaluation took 1.2e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.12 seconds.

Adjust your expectations accordingly!

Gradient evaluation took 2.8e-05 seconds

1000 transitions using 10 leapfrog steps per transition would take 0.28 seconds.

Adjust your expectations accordingly!

varnames = 'beta', 'alpha', 'frac_outliers'

sample = np.array([traces_smart[k].ravel() for k in varnames]).T

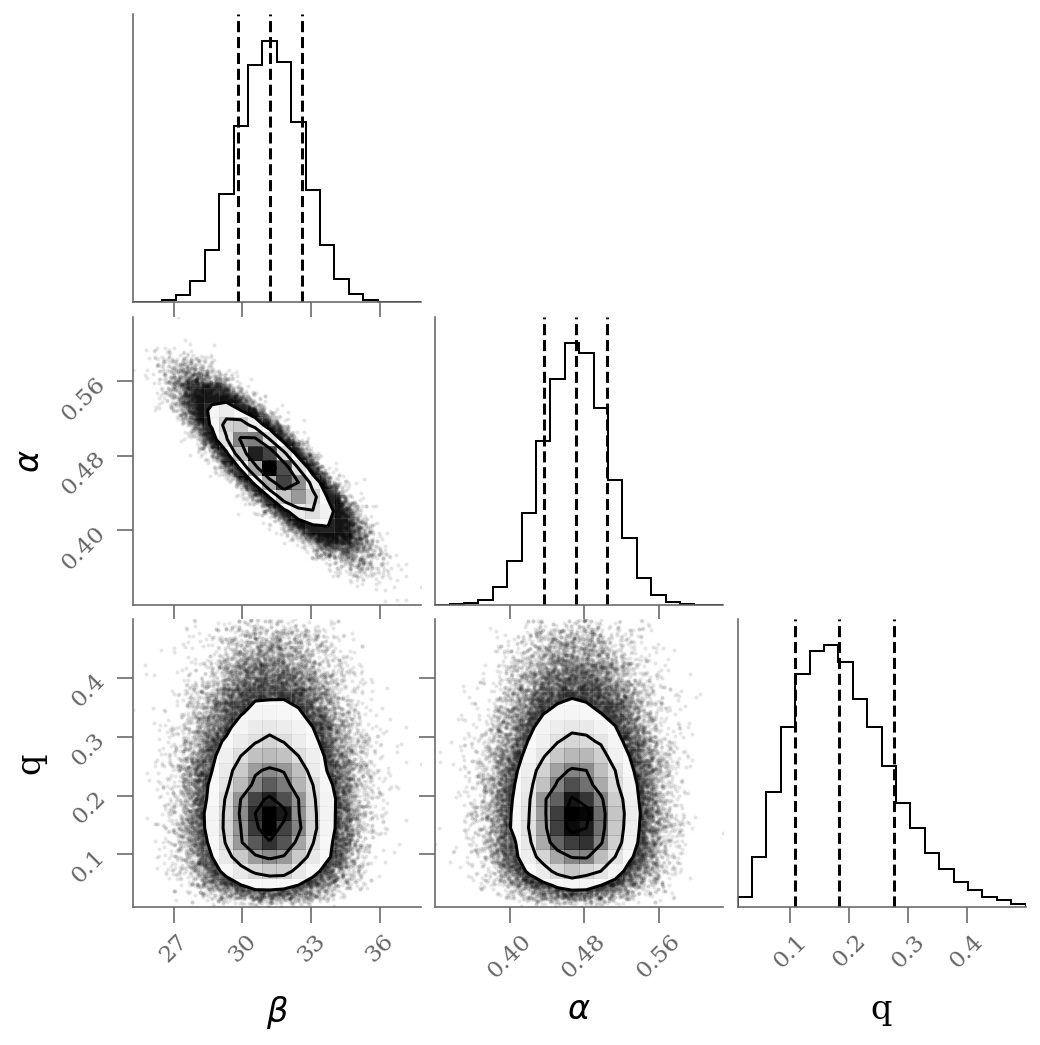

corner(sample[:, :], labels=(r'$\beta$', r'$\alpha$', r'q'), quantiles=(0.16, 0.50, 0.84));

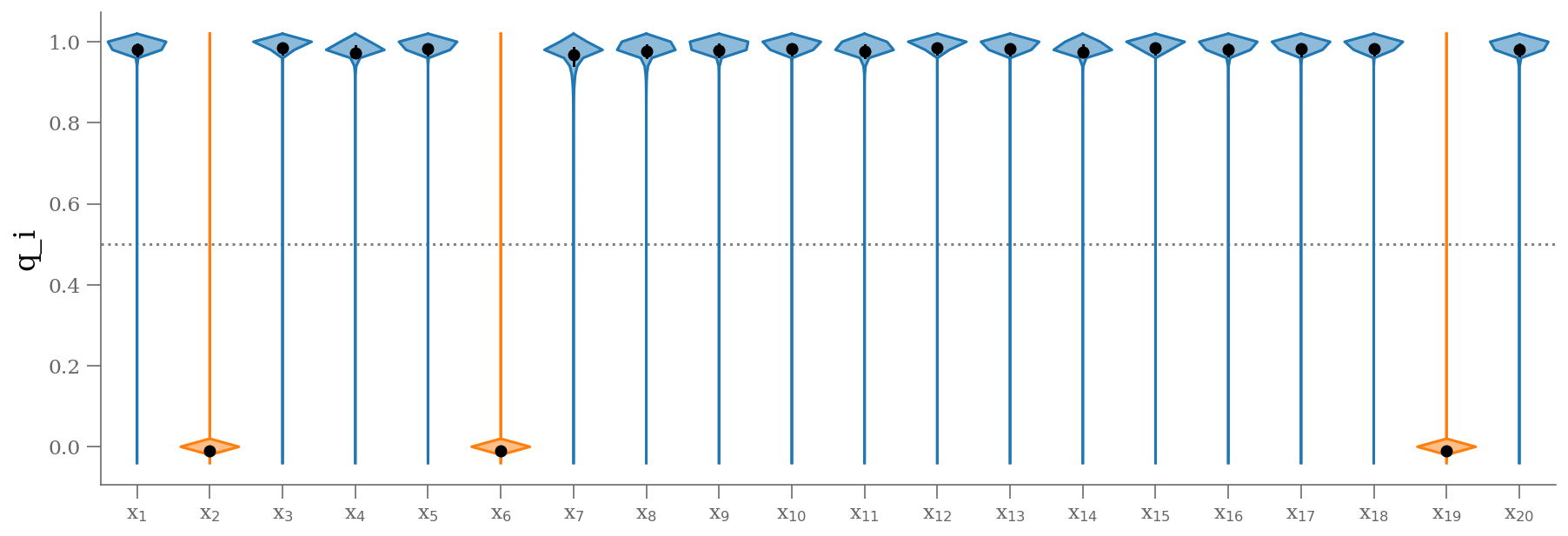

samples_q = 1 - traces_smart['is_outlier'].T

plt.figure(figsize=(15, 5))

colors = np.where(np.median(samples_q, axis=0) < 0.5, 'C1', 'C0')

fast_violin(samples_q, color=colors, bins=np.arange(-0.05, 1.05, 0.02))

ticks = (np.arange(samples_q.shape[1]) + 1)

lbls = [r'x$_{{{0:d}}}$'.format(k) for k in (ticks).astype(int)]

plt.xticks(ticks, lbls)

plt.hlines([0.5], 0.5, len(ticks) + 0.5, color='0.5', zorder=-100, ls=':')

plt.xlim(0.5, len(ticks) + 0.5)

plt.ylabel('q_i');

outliers = np.median(traces_smart['is_outlier'], axis=1) >= 0.5

n_outliers = sum(outliers)

x, y, sy = np.loadtxt('line_outlier.dat', unpack=True)

plt.errorbar(x, y, yerr=sy, marker='o', ls='None', ms=5, color='k');

ypred_smart = sample[:, 0] + sample[:, 1] * x[:, None]

plt.plot(x, ypred_blind[:, :100], color='C0',

rasterized=True, alpha=0.3, lw=0.3, zorder=-3)

plt.plot(x, ypred_brute[:, :1000], color='C1',

rasterized=True, alpha=0.3, lw=0.3, zorder=-2)

plt.plot(x, ypred_smart[:, :1000], color='C2',

rasterized=True, alpha=0.3, lw=0.3, zorder=-1)

plt.plot(x[outliers], y[outliers], 'ro',

ms=20, mec='red', mfc='red', zorder=1, alpha=0.4)

plt.xlabel('X');

plt.ylabel('Y');

display(Markdown(r"""Without outlier modeling (blue):

* $\alpha$ = {1:0.3g} [{0:0.3g}, {2:0.3g}]

* $\beta$ = {4:0.3g} [{3:0.3g}, {5:0.3g}]

""".format(*percs_blind.T.ravel())

))

display(Markdown(r"""With outlier modeling (orange): 22 parameters

* $\alpha$ = {1:0.3g} [{0:0.3g}, {2:0.3g}]

* $\beta$ = {4:0.3g} [{3:0.3g}, {5:0.3g}]

* number of outliers: ${6:d}$

""".format(*percs_brute.T.ravel(), n_outliers)

))

percs_smart = np.percentile(sample, [16, 50, 84], axis=0)

display(Markdown(r"""With outlier modeling (green): 3 parameters

* $\alpha$ = {1:0.3g} [{0:0.3g}, {2:0.3g}]

* $\beta$ = {4:0.3g} [{3:0.3g}, {5:0.3g}]

* $q$ = {7:0.3g} [{6:0.3g}, {8:0.3g}]

* number of outliers: ${9:d}$

""".format(*percs_smart.T.ravel(), n_outliers)

))The infered parameters are nearly identical but the convergence is much faster in the last approach.