Adapted by Aarya Patil from the notebook in Astro Hack Week 2020

# only necessary if you're running Python 2.7 or lower

from __future__ import print_function, division

from six.moves import range

# import matplotlib and define our alias

from matplotlib import pyplot as plt

# plot figures within the notebook rather than externally

%matplotlib inline

# numpy

import numpy as np

# scipy

import scipyOverview¶

Although linear regression appears simple at first glance, it actually has a surprising amount of depth and is applicable in a bunch of different domains. Most importantly, it provides an accessible way to get a handle on several big concepts in Bayesian Inference, including:

defining objective functions,

probabilities and likelihoods,

estimating parameters,

the concept of priors, and

marginalizing over uncertainties.

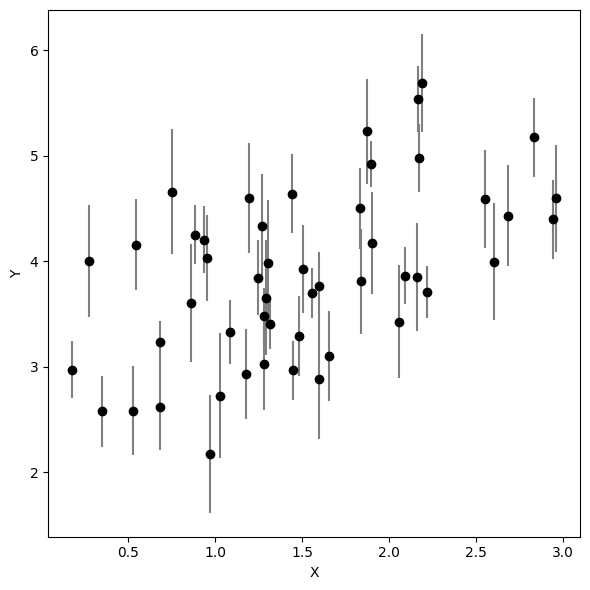

Data¶

Let’s generate some mock data below. Don’t worry about getting all the details here -- we will come back to them later as we explore how to fit a line better.

def gen_mock_data(seed=123, m=0.875, b=2.523, s=0.523, unc=[0.2, 0.6], N=50):

"""

Generate `N` mock data points from the line

with slope `m`, intercept `b`, and

intrinsic scatter `s` with measurement uncertainties

uniformly distributed between the values in `unc` using

a random number generator with the starting `seed`.

"""

rstate = np.random.RandomState(seed)

# generate synthetic data

x = np.sort(3. * rstate.rand(N)) # x values

y_int = m * x + b # underlying line

y = rstate.normal(y_int, s) # with intrinsic scatter

yerr = rstate.uniform(unc[0], unc[1], N) # measurement errors

yobs = rstate.normal(y, yerr)

return x, yobs, yerr# generate mock data

x, y, ye = gen_mock_data()# plot data

plt.figure(figsize=(6, 6))

plt.errorbar(x, y, yerr=ye, fmt="ko", ecolor='gray', capsize=0)

plt.xlabel('X')

plt.ylabel('Y')

plt.tight_layout()

Problem¶

Our starting goal here is going to be pretty simple: try to determine a simple linear relationship. In other words, we want a model like

where is the slope and is the -intercept. We will slowly make this model more complicated as we try and model additional features of the data.

“Chi by Eye”¶

To start, let’s ignore all attempts to make this quantitative. The human brain is actually quite good at fitting models to data, so we can do a “chi by eye” process (based on the “chi-squared” statistic we will define later) and just try and benchmark what looks like a reasonable fit.

In the cell below, just take a minute or two to see if you can find a “best guess” for the slope and intercept.

# best estimates of slope and intercept

m_1, b_1 = 0.4, 3.3

# plot data

plt.figure(figsize=(8, 6))

plt.errorbar(x, y, yerr=ye, fmt="ko", ecolor='gray',

capsize=0, label='Data')

plt.plot(x, m_1 * x + b_1, lw=4, alpha=0.7, color='red',

label='by eye')

plt.xlabel('x')

plt.ylabel('y')

plt.legend()

plt.tight_layout()

Your guess should look “reasonable”, but you might not feel so good about it. Why? Can you say why it’s the best fit? How about uncertainties on the slope and intercept?

Part of the difficulty here is that there is no quantitative metric for what makes a fit “good”. We will now try to rectify this problem.

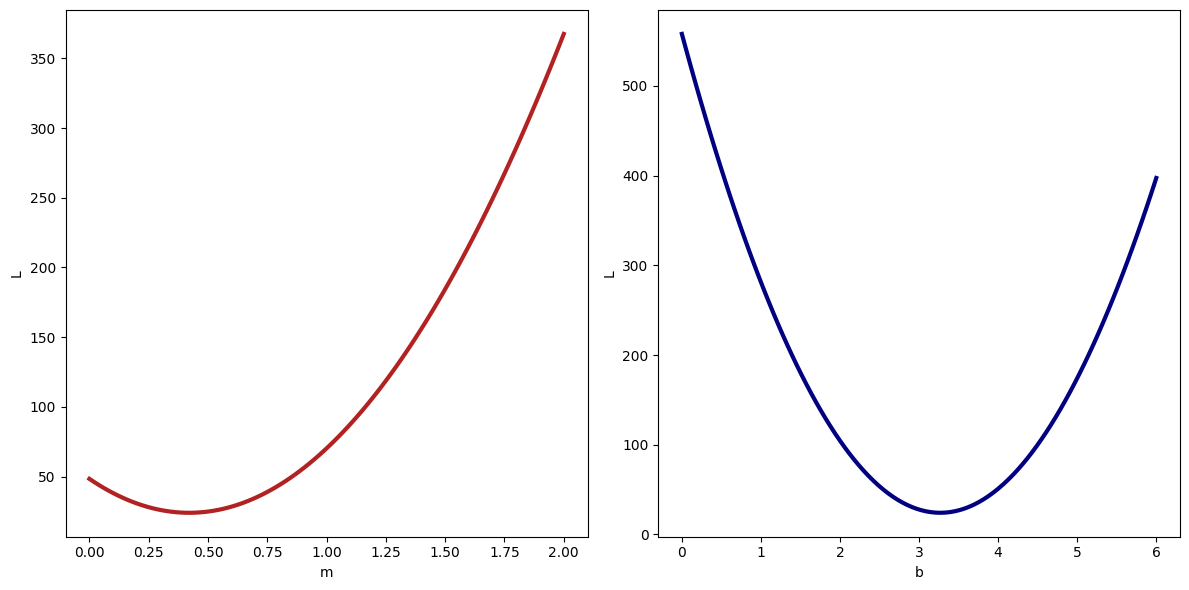

Objective (Loss) Function¶

One way to define whether a fit is any good is to see how well it actually predicts the data it is supposed to be fitting. One way to quantify this involves looking at the residuals

where indicates the th datapoint, is the prediction from the model and is the observed (noisy) value. We are interested in the absolute value because what probably matters is the total error, not whether it’s positive or negative. Note that we are currently ignoring the errors.

We would ideally like to have a model that has the smallest residuals for all the points. Let’s therefore define a loss function:

where the line now indicates “given” or “conditioned on”. In other words, what is the loss of a particular slope and intercept given the data ?

is a power that all residuals are raised to that control how sensitive the loss function is to large and small residuals. Common values for are often , with (squaring the residuals) being the most common.

Filling in the loss function defined below, see how changing the values of the slope and intercept change the loss. Then experiment with how different values of change the behavior you see.

# Loss function

def loss(theta, p=2., x=x, y=y):

"""A simple loss function as a function of parameters `theta`."""

m, b = theta # reassign parameters

ypred = m * x + b

resid = np.abs(ypred - y)

return np.sum(resid**p)# change m at fixed b

mgrid = np.linspace(0, 2, 100)

loss_m = np.array([loss([m, b_1], p=2.) for m in mgrid])

# change b at fixed m

bgrid = np.linspace(0,6, 100)

loss_b = np.array([loss([m_1, b], p=2.) for b in bgrid])

# plot results

plt.figure(figsize=(12, 6))

plt.subplot(1, 2, 1)

plt.plot(mgrid, loss_m, lw=3, color='firebrick')

plt.xlabel('m')

plt.ylabel('L')

plt.subplot(1, 2, 2)

plt.plot(bgrid, loss_b, lw=3, color='navy')

plt.xlabel('b')

plt.ylabel('L')

plt.tight_layout()

To find the best fit, we want to minimize our loss function.

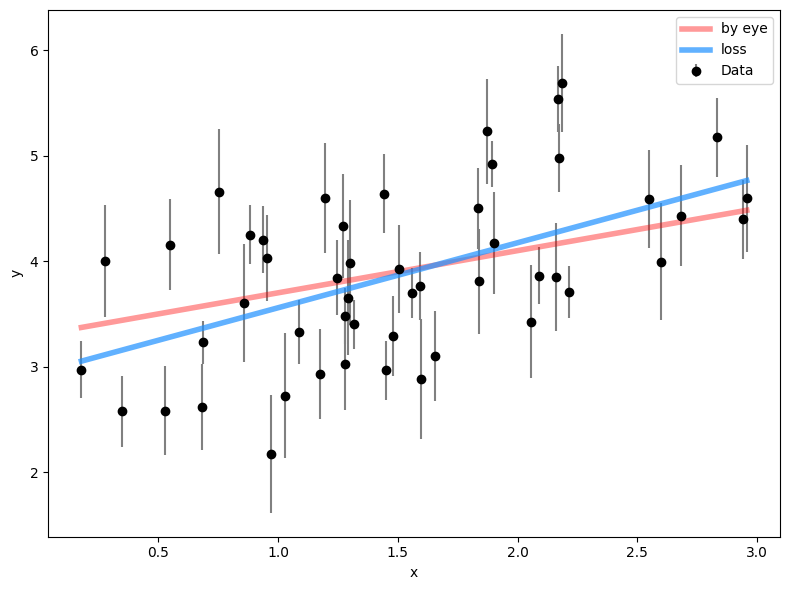

With help from the documentation, use the minimize function from scipy.optimize to get the best-fit parameters theta based on our loss function loss. Quantify the improvement between your initial guess and this new result based on the loss function values. Are they significant? Why or why not?

If you have extra time, explore how these change as you vary away from the default value.

from scipy.optimize import minimize

# minimize the loss function

results = minimize(loss, [m_1, b_1])

print(results) message: Optimization terminated successfully.

success: True

status: 0

fun: 22.952030384848015

x: [ 6.166e-01 2.942e+00]

nit: 4

jac: [ 4.768e-07 0.000e+00]

hess_inv: [[ 2.056e-02 -3.095e-02]

[-3.095e-02 5.660e-02]]

nfev: 18

njev: 6

# get best-fit result

m_2, b_2 = results['x']

# plot it against previous results

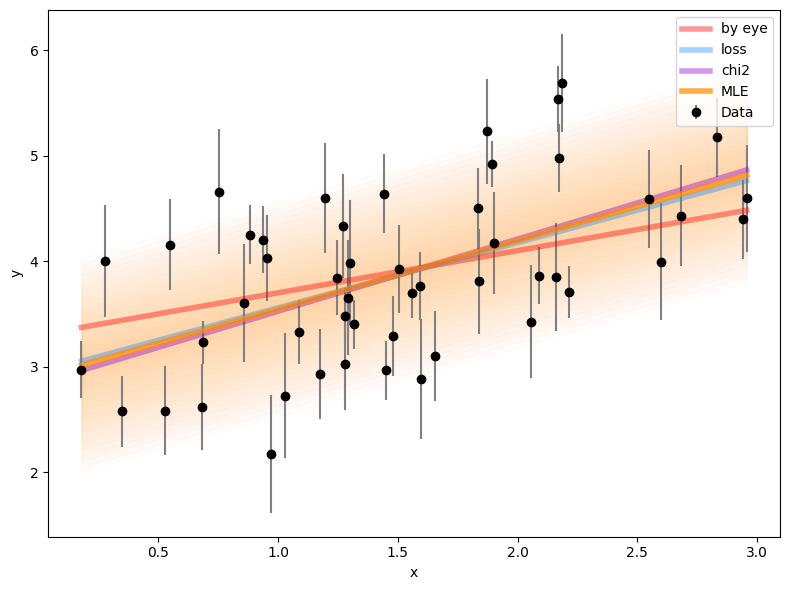

plt.figure(figsize=(8, 6))

plt.errorbar(x, y, yerr=ye, fmt="ko", ecolor='gray',

capsize=0, label='Data')

plt.plot(x, m_1 * x + b_1, lw=4, alpha=0.4, color='red',

label='by eye')

plt.plot(x, m_2 * x + b_2, lw=4, alpha=0.7, color='dodgerblue',

label='loss')

plt.xlabel('x')

plt.ylabel('y')

plt.legend()

plt.tight_layout()

# quantifying the improvement

print('Change in Loss:', loss([m_1, b_1]) - loss([m_2, b_2]))Change in Loss: 1.192922407460447

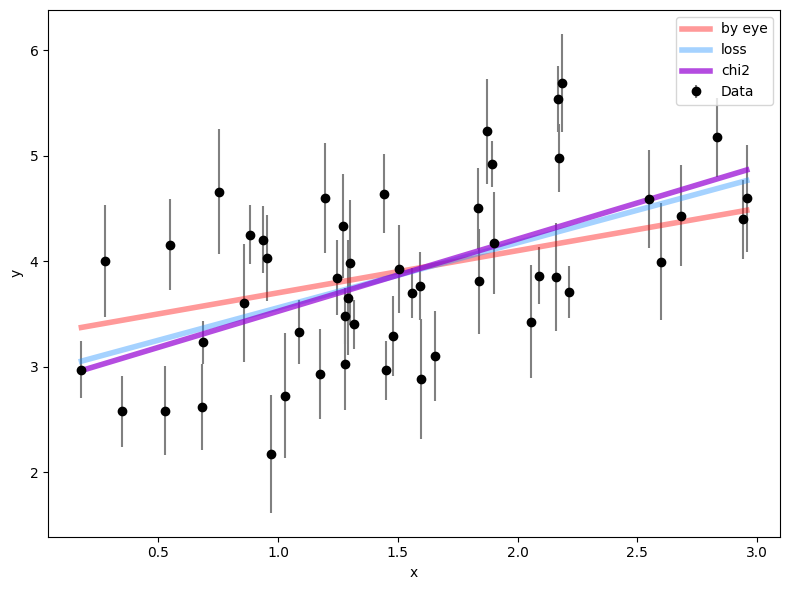

Incorporating Errors with Chi-Square¶

So far, we haven’t tried to incorporate errors into our linear regression model. We probably want the fit to take into account whether a point has large or small errors. Points with large errors probably should be “down-weighted” in the fit since they are more uncertain. We can accomplish this be defining the error-normalized resuidual :

where is the measurement error associated with . This should make some intuitive sense: we are just normalizing the residual by the error, so now we are measuring how discrepant the prediction is in units of the error bar for each point.

We can now define the chi-square statistic often used in the astronomical literature:

Following the example above, define a chi-square function and minimize it. Then quantify how much better the new best-fit model is compared to the previous two. Is this a substantial improvement over what we found earlier? Why or why not?

# chi2 function

def chi2(theta, x=x, y=y, ye=ye):

"""Chi-square as a function of parameters `theta`."""

m, b = theta # reassign parameters

ypred = m * x + b

resid = ypred - y

return np.sum(resid**2 / ye**2)# minimize chi2

results = minimize(chi2, [m_2, b_2])

print(results) message: Optimization terminated successfully.

success: True

status: 0

fun: 146.09510191676458

x: [ 6.848e-01 2.840e+00]

nit: 4

jac: [ 0.000e+00 -5.722e-06]

hess_inv: [[ 2.860e-03 -4.179e-03]

[-4.179e-03 7.404e-03]]

nfev: 18

njev: 6

# get best-fit result

m_3, b_3 = results['x']

# plot it against previous results

plt.figure(figsize=(8, 6))

plt.errorbar(x, y, yerr=ye, fmt="ko", ecolor='gray',

capsize=0, label='Data')

plt.plot(x, m_1 * x + b_1, lw=4, alpha=0.4, color='red',

label='by eye')

plt.plot(x, m_2 * x + b_2, lw=4, alpha=0.4, color='dodgerblue',

label='loss')

plt.plot(x, m_3 * x + b_3, lw=4, alpha=0.7, color='darkviolet',

label='chi2')

plt.xlabel('x')

plt.ylabel('y')

plt.legend()

plt.tight_layout()

# quantifying the improvement

print('Change in chi2:',

chi2([m_1, b_1]) - chi2([m_3, b_3]),

chi2([m_2, b_2]) - chi2([m_3, b_3]))Change in chi2: 14.923665349813092 0.8162875786571533

Defining a Model¶

We now want to consider an additional source of uncertainty: some amount of intrinsic scatter in the fitted relationship. In other words, in addition to the scatter caused by the observational uncertainties, we also want to add on an additional scatter that is the same for all points.

How might we do so? Well, maybe we could try something like

and then write down our model as before, but now as a function of , , and . Notice, however, that will always get smaller as increases, since that means the error-normalized residual gets smaller too. Whoops! Maybe we can add on a penalty to disfavor large values? But what would be an appropriate penalty? Maybe something like

could work, and we could argue for reasons why it might be reasonable. This basic result, however, reveals a fundamental problem with how we’re approached fitting a line so far: we haven’t actually defined an underlying model for the data.

Likelihoods¶

All models start with trying to understand the data generating process. In other words, if we wanted to simulate data based on an input set of parameters, how would we do so?

In the space below, write down the steps we would need to generate data with a given a slope , intercept , and scatter along with associated positions and uncertainties values. Don’t worry about the details -- just the large conceptual picture is enough.

Space intentionally left blank

Probability Density Functions¶

To do so, let’s start with the observed values. We have observational errors, but what do those mean? Well, in general it means we assume the observed data follow a Normal distribution (also called a “Gaussian”) such that the probability that is any particular value follows a probability density function of the form

Since our model for the data is , this then gives

Although it is beyond the scope of this notebook to prove, it can be shown that assuming that the intrinsic scatter is also Gaussian gives the modified result:

Combining Data¶

The probability of independent events , , and all occuring is just the product of their individual probabilities:

The same holds true for data points: if each data point is an independent observation, the total probability is just the individual probabilities for each data point multiplied together. This gives

Since the probabilities can get really small really fast, for numerical stability this is almost always re-written in logarithmic form:

Notice that, if we explicitly substitute in the Gaussian probability density function, we get this intriguing result:

The first term here looks a lot like our original expression, except now with the modified errors. And the second term now looks a lot like a penalty term that disfavors larger values! So we can see that by explicitly writing out a model, we naturally accomplish our original objective.

Maximum-Likelihood Estimate (MLE)¶

The “best-fit” parameters now can be defined as those that maximize the likelihood (or, equivalently, minimize the negative of the likelihood).

Write down a function that computes the (negative) log-likelihood defined above and minimize it. Quantify the difference between these maximum-likelihood results and those derived earlier from the best-fit . Does the model favor adding in this additional scatter? Why or why not?

def negloglike(theta, x=x, y=y, ye=ye):

"""(Negative) log-likelihood as a function of parameters `theta`."""

m, b, s = theta # reassign parameters

ypred = m * x + b

resid = ypred - y

chi2 = resid**2 / (ye**2 + s**2)

const = np.log(2 * np.pi * (ye**2 + s**2))

logl = -0.5 * np.sum(chi2 + const)

return -logl# minimize negative log-likelihood

results = minimize(negloglike, [m_3, b_3, 0.5])

print(results) message: Optimization terminated successfully.

success: True

status: 0

fun: 50.47672158309011

x: [ 6.486e-01 2.897e+00 5.095e-01]

nit: 6

jac: [ 4.768e-06 2.861e-06 4.768e-07]

hess_inv: [[ 1.737e-02 -2.594e-02 -2.702e-04]

[-2.594e-02 4.723e-02 3.457e-04]

[-2.702e-04 3.457e-04 6.753e-03]]

nfev: 32

njev: 8

# get best-fit result

m_4, b_4, s_4 = results['x']

# plot it against previous results

plt.figure(figsize=(8, 6))

plt.errorbar(x, y, yerr=ye, fmt="ko", ecolor='gray',

capsize=0, label='Data')

plt.plot(x, m_1 * x + b_1, lw=4, alpha=0.4, color='red',

label='by eye')

plt.plot(x, m_2 * x + b_2, lw=4, alpha=0.4, color='dodgerblue',

label='loss')

plt.plot(x, m_3 * x + b_3, lw=4, alpha=0.4, color='darkviolet',

label='chi2')

plt.plot(x, m_4 * x + b_4, lw=4, alpha=0.7, color='darkorange',

label='MLE')

[plt.fill_between(x, m_4 * x + b_4 + s_4 * n, m_4 * x + b_4 - s_4 * n,

alpha=0.02, color='darkorange')

for n in np.linspace(0, 2, 20)]

plt.xlabel('x')

plt.ylabel('y')

plt.legend()

plt.tight_layout()

# quantifying the improvement

print('Change in loglike:',

negloglike([m_3, b_3, 0]) - negloglike([m_4, b_4, s_4]))Change in loglike: 22.117531960789478

Posteriors¶

We now have a working model that can describe the underlying probabilistic processes that generate the data. However, we’re still missing one small thing. Notice that, in our earlier notation,

describes the probability of given (i.e. conditioned on) . So our likelihood

describes something similar: the probability of seeing the observed data given the underlying model parameters , , and along with the and values. What we want, however, is this expression:

This now describes the probability of our model parameters given the data.

Getting at this distribution requires exploiting the fact that probabilities can factor. This allows us to rewrite:

If we replace and , we are left with what’s known as Bayes’ Theorem:

Pulling apart this expression:

The term , is the likelihood that we have been working with already and describes the probability of seeing the data given the model.

The term is known as the prior: it characterizes our prior knowledge about the parameters is question without seeing the data.

The term is known as the evidence (or marginal likelihood). Since this is just a constant, most often we can just ignore it.

Finally, the left-hand side is known as the posterior since it combines the prior and the likelihood together.

Priors¶

A central component of Bayesian inference is the prior, which describes our prior knowledge of the parameters. This is where all of the power (and drawbacks) of Bayesian inference comes from, since the priors can dramatically affect the analysis.

As an example, consider priors on the components that are independent and Gaussian, analagous to our data. If we wrote out the new log-posterior, we would then get:

where is again a constant that we can ignore. We can see the priors correspond to additional penalty terms on the parameters that function like additional data points.

Maximum-a-Posteriori (MAP) Estimate¶

Finally, we can now find our parameter estimates with the highest log-posterior values, known as the maximum-a-posteriori (MAP) estimate.

Using the functional form above, define a Gaussian log-prior over each of the three parameters by specifying a given mean and standard deviation. Then, minimize the (negative) log-posterior.

prior_means = np.array([1., 3., 0.5]) # m, b, s

prior_stds = np.array([0.25, 0.5, 0.15]) # m, b, s

def neglogprior(theta, mean=prior_means, std=prior_stds):

"""(Negative) log-prior as a function of parameters `theta`."""

chi2 = (theta - mean)**2 / std**2

const = np.log(2. * np.pi * std**2)

logp = -0.5 * np.sum(chi2 + const)

return -logp

def neglogpost(theta, x=x, y=y, ye=ye, mean=prior_means, std=prior_stds):

"""(Negative) log-posterior as a function of parameters `theta`."""

m, b, s = theta # reassign parameters

logp = -neglogprior(theta, mean=mean, std=std)

logl = -negloglike(theta, x=x, y=y, ye=ye)

return -(logl + logp)# minimize negative log-likelihood

results = minimize(neglogpost, [m_4, b_4, s_4])

print(results) message: Optimization terminated successfully.

success: True

status: 0

fun: 50.11148436191087

x: [ 7.103e-01 2.811e+00 5.075e-01]

nit: 8

jac: [ 0.000e+00 9.537e-07 -1.431e-06]

hess_inv: [[ 1.221e-02 -1.761e-02 2.954e-04]

[-1.761e-02 3.354e-02 -3.595e-04]

[ 2.954e-04 -3.595e-04 5.239e-03]]

nfev: 44

njev: 11

# get best-fit result

m_5, b_5, s_5 = results['x']

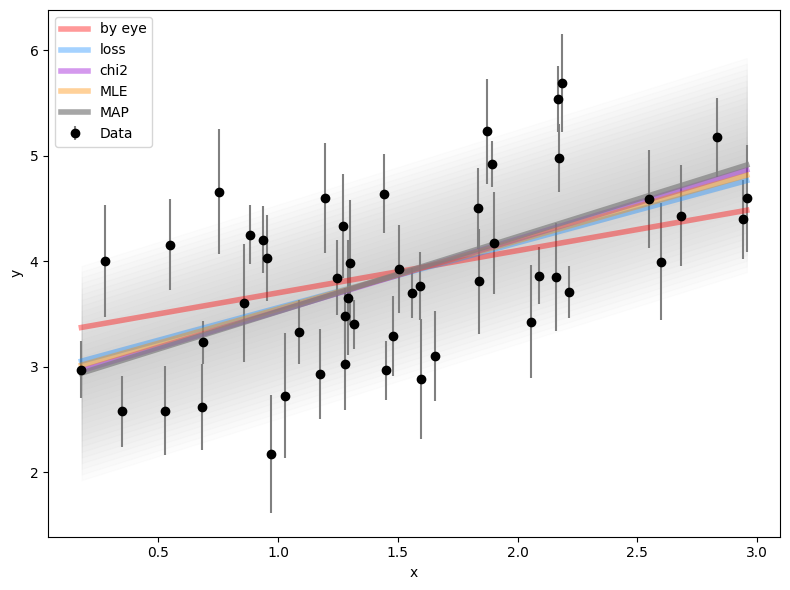

# plot it against previous results

plt.figure(figsize=(8, 6))

plt.errorbar(x, y, yerr=ye, fmt="ko", ecolor='gray',

capsize=0, label='Data')

plt.plot(x, m_1 * x + b_1, lw=4, alpha=0.4, color='red',

label='by eye')

plt.plot(x, m_2 * x + b_2, lw=4, alpha=0.4, color='dodgerblue',

label='loss')

plt.plot(x, m_3 * x + b_3, lw=4, alpha=0.4, color='darkviolet',

label='chi2')

plt.plot(x, m_4 * x + b_4, lw=4, alpha=0.4, color='darkorange',

label='MLE')

plt.plot(x, m_5 * x + b_5, lw=4, alpha=0.7, color='gray',

label='MAP')

[plt.fill_between(x, m_5 * x + b_5 + s_5 * n, m_5 * x + b_5 - s_5 * n,

alpha=0.02, color='gray')

for n in np.linspace(0, 2, 20)]

plt.xlabel('x')

plt.ylabel('y')

plt.legend()

plt.tight_layout()

Parameter Uncertainties¶

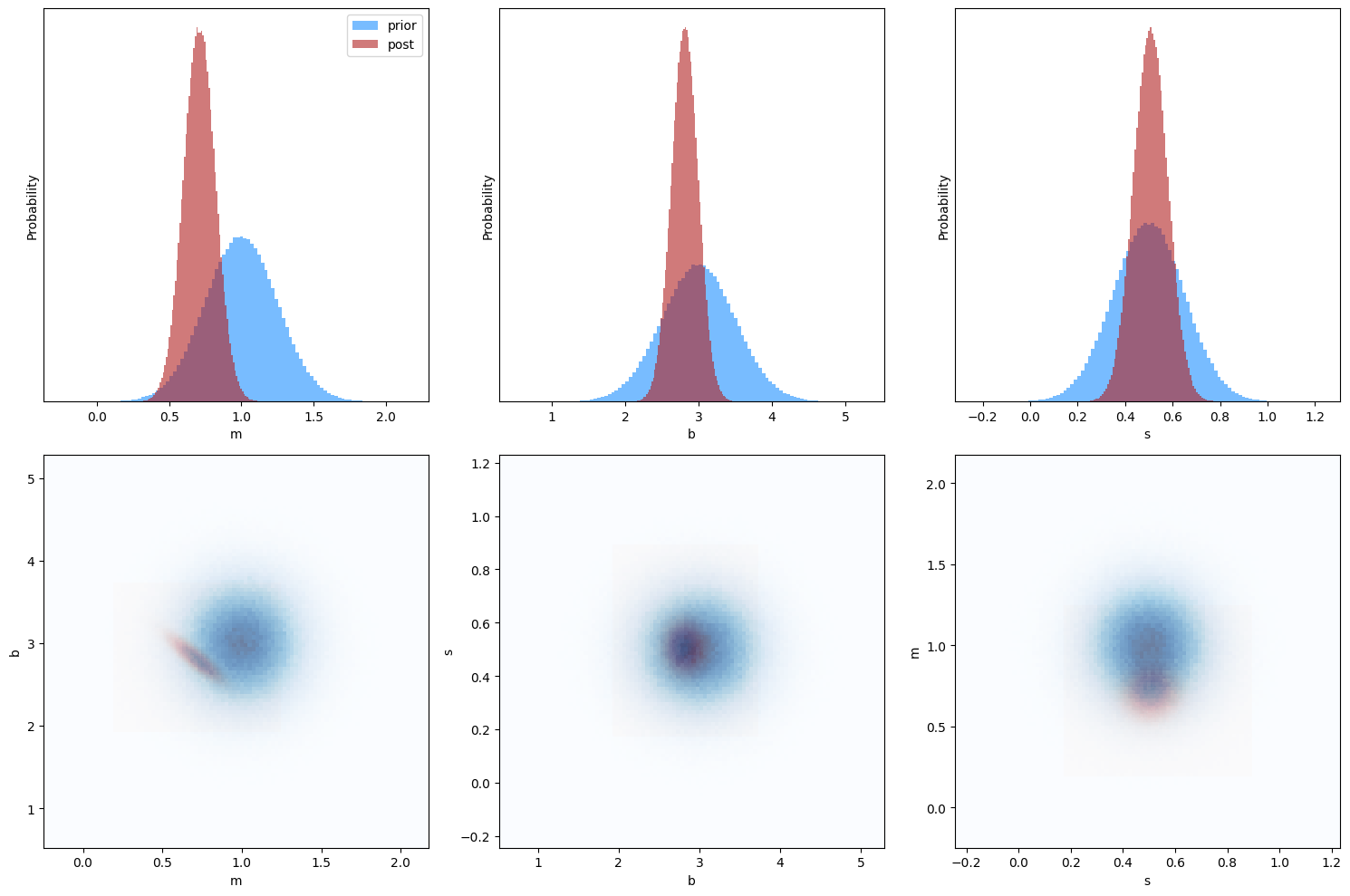

In addition to just providing a best-fit solution, the posterior distribution now quantifies just how much more likely one model is than another. While exploring this fully is beyond the scope of this introduction, one neat result of using a properly defined probability as the function to be minimize is that the minimization routine provides estimates of the parameter errors via the hess_inv item in the output results dictionary. This allows us to visualize how our final posterior distribution compares to our input priors, which is shown below.

Using the plots below, explore how changing the priors impacts the inferred solution. At what point do the best-fit values and errors become “prior-dominated” (prior largely determines the results)? At what point do they become “likelihood-dominated” (prior has little impact on the result)?

n = int(1e6)

# generate prior samples

m_prior, b_prior, s_prior = np.random.multivariate_normal(prior_means,

np.diag(prior_stds**2),

size=n).T

# generate posterior samples

m_post, b_post, s_post = np.random.multivariate_normal(results['x'],

results['hess_inv'],

size=n).T

# generate 1-D histograms of priors vs posteriors

plt.figure(figsize=(15, 10))

plt.subplot(2, 3, 1)

plt.hist(m_prior, 100, density=True, label='prior',

color='dodgerblue', alpha=0.6)

plt.hist(m_post, 100, density=True, label='post',

color='firebrick', alpha=0.6)

plt.xlabel('m')

plt.ylabel('Probability')

plt.yticks([])

plt.legend()

plt.subplot(2, 3, 2)

plt.hist(b_prior, 100, density=True, label='prior',

color='dodgerblue', alpha=0.6)

plt.hist(b_post, 100, density=True, label='post',

color='firebrick', alpha=0.6)

plt.xlabel('b')

plt.ylabel('Probability')

plt.yticks([])

plt.subplot(2, 3, 3)

plt.hist(s_prior, 100, density=True, label='prior',

color='dodgerblue', alpha=0.6)

plt.hist(s_post, 100, density=True, label='post',

color='firebrick', alpha=0.6)

plt.xlabel('s')

plt.ylabel('Probability')

plt.yticks([])

plt.tight_layout()

# generate 2-D histograms of posteriors

plt.subplot(2, 3, 4)

plt.hist2d(m_post, b_post, 100,

cmap='Reds', alpha=0.6)

plt.hist2d(m_prior, b_prior, 100,

cmap='Blues', alpha=0.6)

plt.xlabel('m')

plt.ylabel('b')

plt.subplot(2, 3, 5)

plt.hist2d(b_post, s_post, 100,

cmap='Reds', alpha=0.6)

plt.hist2d(b_prior, s_prior, 100,

cmap='Blues', alpha=0.6)

plt.xlabel('b')

plt.ylabel('s')

plt.subplot(2, 3, 6)

plt.hist2d(s_post, m_post, 100,

cmap='Reds', alpha=0.6)

plt.hist2d(s_prior, m_prior, 100,

cmap='Blues', alpha=0.6)

plt.xlabel('s')

plt.ylabel('m')

plt.tight_layout()

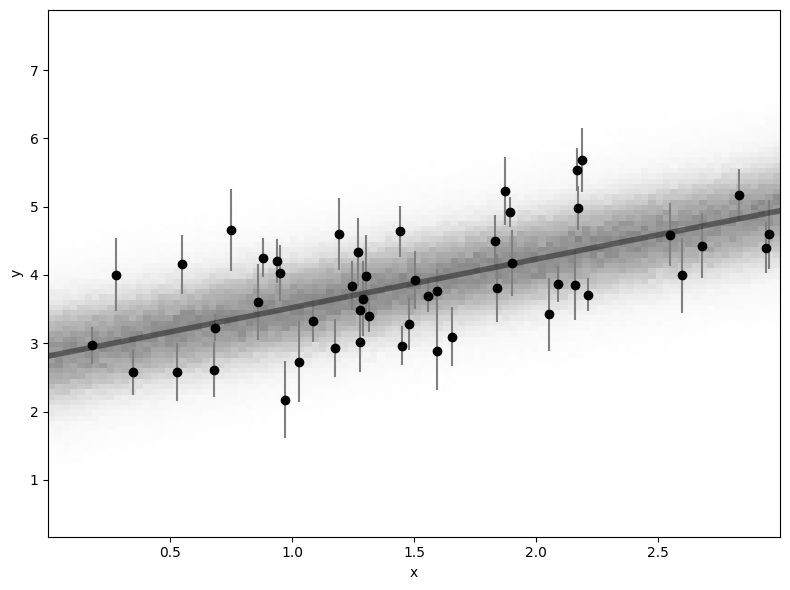

One additional way to view the results is to generate the posterior predictive. This just means to actually see what the different fits look like overplotted on top of the data (i.e. the predictions marginalized over the parameter uncertainties). If you have extra time, try visualizing this uncertainty by overplotting the parameters generated above over the data points.

# plot posterior predictive

plt.figure(figsize=(8, 6))

plt.errorbar(x, y, yerr=ye, fmt="ko", ecolor='gray',

capsize=0, label='Data')

xs = np.sort(np.random.uniform(0, 3, n))

ys = np.random.normal(m_post * xs + b_post, s_post)

plt.hist2d(xs, ys, 100, cmap='Greys', alpha=0.5)

plt.plot(xs, m_5 * xs + b_5, lw=4, alpha=0.4, color='black',

label='MAP')

plt.xlabel('x')

plt.ylabel('y')

plt.tight_layout()

Model Comparison¶

One way to compare models is by computing the Bayesian Information Criterion (BIC) as done below. Note that p is the number of parameters in the model, n is the number of observations, and the last term is where is the MLE of .

p = 3

BIC = p * np.log(n) + 2*negloglike([m_4, b_4, s_4])Try other ways of comparing models below: